Troubleshooting usage of Python's multiprocessing module in a FastAPI app

Technology puzzles us sometimes, but we press on…

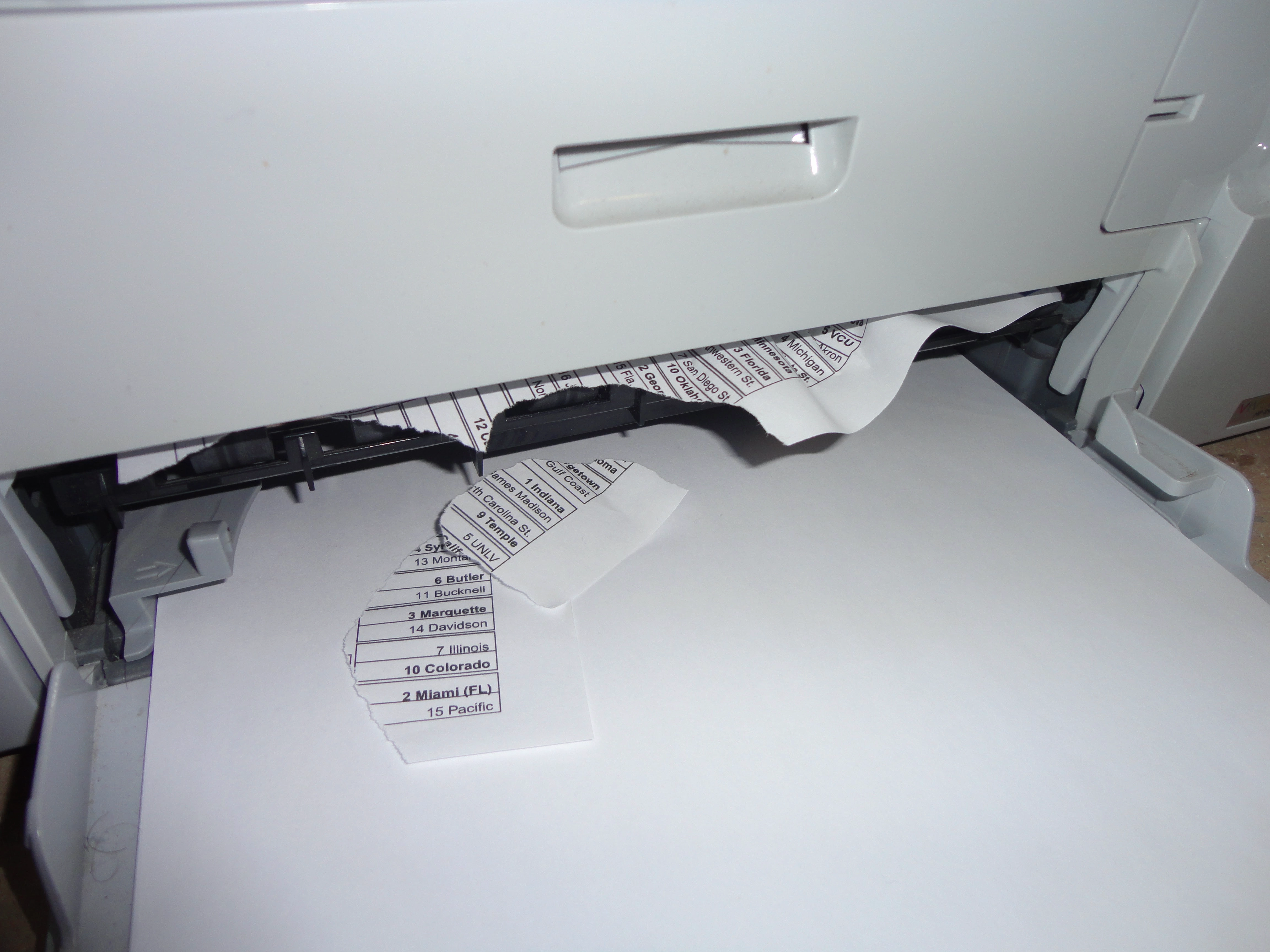

I’m in the process of developing a big upgrade to my March Madness model for this year’s NCAA basketball tournament. Before, it was basically a simple Excel spreadsheet ported to Python. What I’m aiming for this year is a full-blown simulation of each game based on the logical flow of a basketball game (for example, possessions generally end in either a shot or a turnover; if there’s a shot, check whether it was made or whether there’s a rebound situation; etc.).
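Here’s a minimal sketch of that possession logic in Python. The rates (`TURNOVER_RATE`, `THREE_POINT_SHARE`, `SHOT_MAKE_RATE`, `OFFENSIVE_REBOUND_RATE`) and the function names are hypothetical placeholders rather than the numbers or code from my model, and the sketch ignores free throws, fouls, and the shot clock.

```python
import random
from typing import Optional, Tuple

# Hypothetical league-average rates, purely for illustration; a real model
# would derive per-team rates from stats (and handle free throws, fouls, etc.).
TURNOVER_RATE = 0.15
THREE_POINT_SHARE = 0.35
SHOT_MAKE_RATE = 0.45
OFFENSIVE_REBOUND_RATE = 0.28


def simulate_possession(rng: random.Random) -> int:
    """Play out one possession and return the points scored (0, 2 or 3)."""
    while True:
        # A possession generally ends in either a turnover or a shot.
        if rng.random() < TURNOVER_RATE:
            return 0
        points = 3 if rng.random() < THREE_POINT_SHARE else 2
        # Made shot: the possession is over.
        if rng.random() < SHOT_MAKE_RATE:
            return points
        # Missed shot: rebound situation. A defensive rebound ends the
        # possession; an offensive rebound loops back for another chance.
        if rng.random() >= OFFENSIVE_REBOUND_RATE:
            return 0


def simulate_game(possessions: int, seed: Optional[int] = None) -> Tuple[int, int]:
    """Give both teams the same number of possessions; return (home, away)."""
    rng = random.Random(seed)
    home = sum(simulate_possession(rng) for _ in range(possessions))
    away = sum(simulate_possession(rng) for _ in range(possessions))
    return home, away


print(simulate_game(70, seed=2021))
```

Passing a seeded `random.Random` around (instead of using the module-level `random` functions) makes any single simulated game reproducible, which helps when debugging a weird result.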

Of course, one simulation is never enough! So I’m hoping to run a few hundred (or a few thousand) simulations per matchup to get a nice distribution of outcomes to analyze. Once I had this thought, it was time to start looking for how to use all of the CPU power I have access to. (This will vary depending on whether I’m testing locally on my gaming PC or running on Google Cloud Run once I push this to “production”…side note, I always feel a little weird calling my personal website a “production” build. It makes it feel like work when it’s more like a passion project.)
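To turn many simulated games into a distribution, something like this sketch could work. `simulate_game` here is a hypothetical stand-in that simply draws each team’s score from a normal distribution (the means and spread are made up); in the real model it would be the possession-by-possession simulation. The summary reports how often team A wins and the deciles of the scoring margin.

```python
import random
import statistics
from typing import Dict, List, Tuple


def simulate_game(rng: random.Random) -> Tuple[int, int]:
    """Hypothetical stand-in for one simulated game: (team_a, team_b) scores.

    The means (72 vs. 68) and spread (10) are made up for illustration.
    """
    return round(rng.gauss(72, 10)), round(rng.gauss(68, 10))


def summarize_matchup(n_sims: int, seed: int = 0) -> Dict[str, object]:
    """Run n_sims simulated games and summarize the margin distribution."""
    rng = random.Random(seed)
    margins: List[int] = []
    for _ in range(n_sims):
        team_a, team_b = simulate_game(rng)
        margins.append(team_a - team_b)
    return {
        "team_a_win_pct": sum(m > 0 for m in margins) / n_sims,
        # A tie would go to overtime in a real game; counted separately here.
        "tie_pct": sum(m == 0 for m in margins) / n_sims,
        "mean_margin": statistics.mean(margins),
        # Nine cut points splitting the margins into ten equal-sized groups.
        "margin_deciles": statistics.quantiles(margins, n=10),
    }


summary = summarize_matchup(1_000)
print(summary["team_a_win_pct"], summary["margin_deciles"])
```

Each simulation is independent of the others, which is exactly what makes this loop a good candidate for spreading across CPU cores.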

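Python’s multiprocessing module was a great place to start. From what I can tell, Python code runs in a single process by default, and because of CPython’s Global Interpreter Lock (GIL), only one thread in that process can execute Python code at a time, so a CPU-heavy loop effectively uses just one core…but many of us are working on machines with multiple cores in 2021! Each separate process gets its own interpreter (and its own GIL), so with a couple of simple lines of code, you can make sure that you’re utilizing as many cores on your machine as you want: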

```python
import multiprocessing

with multiprocessing.Pool(processes=8) as p:
    results = p.map(run_simulation, simulations)
```

You can check out the full code on GitHub, but basically in this context `run_simulation` is the function that will do exactly what it says, and `simulations` is a list of pandas DataFrames that will be populated with results of each simulated game. This code starts a pool of eight worker processes and spreads the simulations across them, so up to eight `run_simulation` calls run at the same time, vs. having to loop through each one individually!
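For a self-contained version of that pattern, here’s a sketch with a hypothetical `run_simulation` that takes a random seed and returns a score margin (not the DataFrame-based function from my repo). Two things seem worth knowing: `Pool.map` pickles each argument and sends it to a worker, so a worker modifying its copy of a DataFrame won’t change the parent’s copy (only return values come back), and the `if __name__ == "__main__":` guard is required whenever the start method is “spawn” or “forkserver”, because child processes re-import the main module. Defaulting to `os.cpu_count()` is an assumption; inside a container it may report more cores than you’re actually allotted.

```python
import multiprocessing
import os
import random
from typing import List, Optional


def run_simulation(seed: int) -> int:
    """Hypothetical stand-in for one simulated game; returns a score margin."""
    rng = random.Random(seed)
    return sum(rng.choice((-3, -2, 0, 2, 3)) for _ in range(70))


def run_all(n_sims: int, processes: Optional[int] = None) -> List[int]:
    # Assumption: default to every CPU os.cpu_count() reports (it can be None).
    processes = processes or os.cpu_count() or 1
    with multiprocessing.Pool(processes=processes) as pool:
        # map() pickles the arguments, spreads them across the worker
        # processes, and returns results in the same order as the inputs.
        return pool.map(run_simulation, range(n_sims))


# Required for "spawn"/"forkserver": child processes re-import this module,
# and without the guard each one would try to start its own pool.
if __name__ == "__main__":
    margins = run_all(1_000)
    print(f"{len(margins)} simulations, team A won {sum(m > 0 for m in margins)}")
```

Passing seeds rather than whole DataFrames also keeps the pickling overhead small, since each task only ships an integer to the worker.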

However, I didn’t get here without a considerable amount of troubleshooting this week…somewhere along the way in testing I noticed that every time I called my new simulation endpoint, one of the Gunicorn workers running my website would die, then restart:

```
[2021-02-06 12:19:04 -0500] [7150] [INFO] Shutting down
[2021-02-06 12:19:04 -0500] [7150] [INFO] Error while closing socket [Errno 9] Bad file descriptor
[2021-02-06 12:19:04 -0500] [7150] [INFO] Finished server process [7150]
[2021-02-06 12:19:04 -0500] [7150] [INFO] Worker exiting (pid: 7150)
[2021-02-06 12:19:06 -0500] [7358] [INFO] Booting worker with pid: 7358
[2021-02-06 12:19:07 -0500] [7358] [INFO] Started server process [7358]
[2021-02-06 12:19:07 -0500] [7358] [INFO] Waiting for application startup.
[2021-02-06 12:19:07 -0500] [7358] [INFO] Application startup complete.
```

I’m not going to pretend to know entirely what’s going on here! But from what I’ve gathered, there’s generally something not kosher about running multiprocessing inside a process that was itself forked by a multi-process server (Gunicorn forks each of its workers from a master process). After trying answer after answer after answer after answer from Google on how to successfully implement multiprocessing alongside my website stack, last night I had finally resigned myself to giving up on multiprocessing entirely and going with a different approach.

Maybe it was the iced coffee this morning, but I tried one last desperate Google search and stumbled across this gem in the FastAPI issues from jongwon-yi:

> Describe the bug
>
> Make a multiprocessing Process and start it. Right after terminate the process, fastapi itself(parent) terminated.

Sounds similar enough to my issue, but it’s currently Open…maybe this is still unresolved. But wait! The very last update on the issue, from Mixser, suggests that this can be fixed with a single line of code:

```python
import multiprocessing

multiprocessing.set_start_method('spawn')
```

Python’s docs on the “spawn” start method:

> The parent process starts a fresh python interpreter process. The child process will only inherit those resources necessary to run the process object’s `run()` method. In particular, unnecessary file descriptors and handles from the parent process will not be inherited. Starting a process using this method is rather slow compared to using *fork* or *forkserver*.

Slow? Probably don’t care, as long as it works! Maybe we can figure out why “fork” (the default on Linux at the time; macOS switched its default to “spawn” in Python 3.8) wasn’t working by reading that section as well? (I added emphasis to what I believe is the key):

> The parent process uses `os.fork()` to fork the Python interpreter. The child process, when it begins, is effectively identical to the parent process. All resources of the parent are inherited by the child process. Note that safely forking a multithreaded process is problematic.
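To see the difference between those two passages in action, this sketch changes a module-level dictionary in the parent after import, then asks a child process created with each start method what it sees. The names are hypothetical, and it assumes the code is saved and run as a script (so “spawn” children can re-import it); “fork” is only offered on Unix.

```python
import multiprocessing
from concurrent.futures import ProcessPoolExecutor
from typing import Dict

# Hypothetical module-level state, assigned when the module is imported.
SETTINGS = {"source": "set at import time"}


def read_setting() -> str:
    """Runs in the child process and reports the value it sees."""
    return SETTINGS["source"]


def compare_start_methods() -> Dict[str, str]:
    # Change the state in the parent *after* import.
    SETTINGS["source"] = "changed in parent at runtime"
    seen: Dict[str, str] = {}
    for method in ("fork", "spawn"):
        if method not in multiprocessing.get_all_start_methods():
            continue  # "fork" isn't available on Windows
        ctx = multiprocessing.get_context(method)
        with ProcessPoolExecutor(max_workers=1, mp_context=ctx) as executor:
            seen[method] = executor.submit(read_setting).result(timeout=60)
    return seen


if __name__ == "__main__":
    print(compare_start_methods())
    # On Linux this prints:
    # {'fork': 'changed in parent at runtime', 'spawn': 'set at import time'}
```

The forked child is a copy of the parent’s memory, runtime changes included, while the spawned child starts from a fresh interpreter that only re-runs the module’s import-time code.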

So…the inner workings are still well beyond my full understanding. But based on a combination of Mixser’s answer and the Python docs, I think I was trying to do something pretty sketchy: forking the same Python interpreter that runs my website to also run these simulations, so every child process inherited all of the web server process’s resources. Starting a totally clean Python interpreter using “spawn” may be a little slower (so far I’ve noticed just a couple of extra seconds in testing), but workers are no longer crashing!
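If setting the start method globally feels heavy-handed, a scoped alternative is to pass a “spawn” context to a process pool and, from an `async` handler, await the work with `loop.run_in_executor` so the event loop isn’t blocked while the simulations run. This is a sketch under assumptions: plain `asyncio` stands in for FastAPI’s event loop, `simulate_matchup` and `run_simulation` are hypothetical, and the worker count of 4 is arbitrary.

```python
import asyncio
import multiprocessing
import random
from concurrent.futures import ProcessPoolExecutor

# A "spawn" context scoped to the pool. Unlike
# multiprocessing.set_start_method("spawn"), this leaves the global default
# alone; set_start_method() raises RuntimeError if the method is already set.
SPAWN_CTX = multiprocessing.get_context("spawn")


def run_simulation(seed: int) -> int:
    """Hypothetical stand-in for one simulated game; returns a score margin."""
    rng = random.Random(seed)
    return sum(rng.choice((-3, -2, 0, 2, 3)) for _ in range(70))


async def simulate_matchup(n_sims: int, executor: ProcessPoolExecutor) -> float:
    """Sketch of an async handler body: offload the CPU work, return win %."""
    loop = asyncio.get_running_loop()
    # Each run_in_executor call returns an awaitable; the event loop stays free
    # to serve other requests while the worker processes crunch numbers.
    margins = await asyncio.gather(
        *(loop.run_in_executor(executor, run_simulation, seed) for seed in range(n_sims))
    )
    return sum(m > 0 for m in margins) / n_sims


async def main() -> None:
    # Assumption: in a web app, create the executor once (e.g. at startup)
    # and reuse it, rather than paying the spawn cost on every request.
    with ProcessPoolExecutor(max_workers=4, mp_context=SPAWN_CTX) as executor:
        print(await simulate_matchup(200, executor))


if __name__ == "__main__":
    asyncio.run(main())
```

Reusing one long-lived pool would also amortize the slower “spawn” startup, since the fresh interpreters are only launched once.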

I wanted to share this experience now because even though the simulation itself is still nowhere near fully baked in terms of an interactive web app, I think it’s totally normal to go through the sort of “progress drought” I went through this week. When I got totally stuck or frustrated, I took breaks, focused on work instead, played some games…just tried to generally mix it up until a workable solution finally came to me. I think in hindsight, these moments of getting stuck and then finally unstuck will appear just as valuable as that ultimate moment of triumph when the app is finally in a presentable state again!

Thanks for reading — feel free to share any perspectives you have, technical or otherwise!